Self-Driving Cars

People are so bad at driving cars that computers don’t have to be that good to be much better. — Marc Andreessen

Estimates suggest that 75% of all cars on the road will be self-driving in less than 25 years. And for about half the population, that is a terrifying prospect.

When surveyed, about 55% of consumers said they would not consider purchasing a self-driving car, mostly due to fears about malfunctions or even electronic hijacking, i.e., fears regarding a loss of control.

Still, although it can be scary to hand the wheel over to the wheel itself, self-driving cars would bring many benefits. For example, there would be less noise and congestion in cities; cars would be more fuel-efficient; the elderly would be individually mobile throughout their lives; and most importantly, experts conservatively estimate that traffic-related accidents would be reduced by 90%.

So, what can we do to help people trust self-driving cars?

MAKING THEM HUMAN

The three-dollar word anthropomorphism refers to perceiving inanimate objects as human. And the more humanlike traits we ascribe to inanimate objects, the more we trust them. In fact, automakers have already been taking advantage of this psychological phenomenon, changing the physical design of their headlights to make them look more like human eyes.

So, what happens when you make a self-driving car more human?

Researchers at the Chicago business school brought participants into the lab for a simulated driving experience. In one condition, participants experienced a self-driving car simulation, where the car handled most of the driving. In the other condition, the self-driving car experience was almost identical, except this car was given a name (Iris), a gender (female), and it spoke to the participant in prerecorded sound bites.

Speaking of crashes: since 2009, Google’s self-driving cars have traveled over 1.3 million miles and been involved in 17 crashes, all of which were the fault of human drivers.

THE PHILOSOPHY OF CRASHES

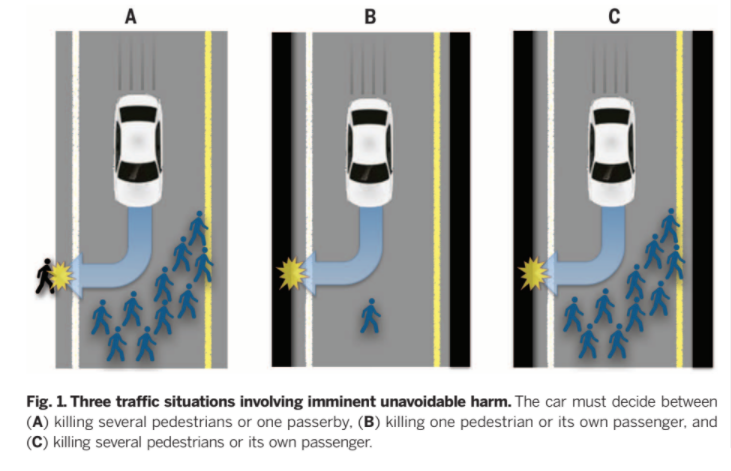

Let me pose you a question: Imagine someone is riding in a self-driving car, when suddenly, 10 pedestrians appear in the middle of the road. Should the car swerve to save the pedestrians (killing the passenger) or should the car keep its course (killing the pedestrians but saving the passenger)?

In recent research, 76% of participants thought it would be morally right to kill the passenger to save the 10 pedestrians. When only one pedestrian would be killed, however, just 23% thought the car should swerve and kill the passenger instead.

In fact, the majority of participants thought we should design cars with this utilitarian consideration, i.e., design the self-driving car’s algorithm to save the most lives. However, even though participants supported designing self-driving cars in this fashion, only 21% of people said they would actually buy a car designed like this.

In other words, people think the car that sacrifices the driver to save the pedestrians is the most moral, but it’s not a car they would want for themselves or their family.

DRIVING FORWARD

Until then, though, if you’re having trouble trusting an inanimate object, try giving it a name and slapping some googly eyes on it. For if it worked for self-driving cars, then it should work for your other mechanical objects, too.

Self-Drivingly,

jdt

Everyday Psychology: One of the largest jobs in the US right now is truck driving. However, with the advent of self-driving cars, all those people will become unemployed. Moreover, all those truck stop towns (with all the restaurants and shops in them) will also become obsolete. And together, this will mean job losses for millions of Americans. Are there other areas you can think of that will likewise be affected by self-driving cars? Are there any ways we can mitigate these losses?

Bonnefon, J. F., Shariff, A., & Rahwan, I. (2016). The social dilemma of autonomous vehicles. Science, 352(6293), 1573-1576.

Waytz, A., Heafner, J., & Epley, N. (2014). The mind in the machine: Anthropomorphism increases trust in an autonomous vehicle. Journal of Experimental Social Psychology, 52, 113-117.