Scientific Survey Results: This Blog Is Awesome

We rarely think people have good sense unless they agree with us. — François de La Rochefoucauld

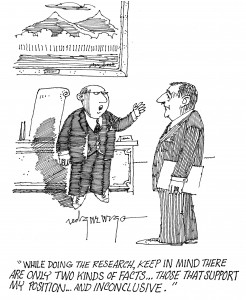

A foundational part of being a psychophilosopher is skepticism. Doubt. Mistrust of your senses, your thoughts, the two-week-old chicken in the fridge that doesn’t look that moldy.

I’ve shown you how you can be wrong about probability estimates, about your perceptions of other people, even about how others perceive you! In fact, the source you trust the most should also be the one you question the most…

Me.

I mean science. No, I still mean me. Well, and science.

For today’s post, we’ll go with science.

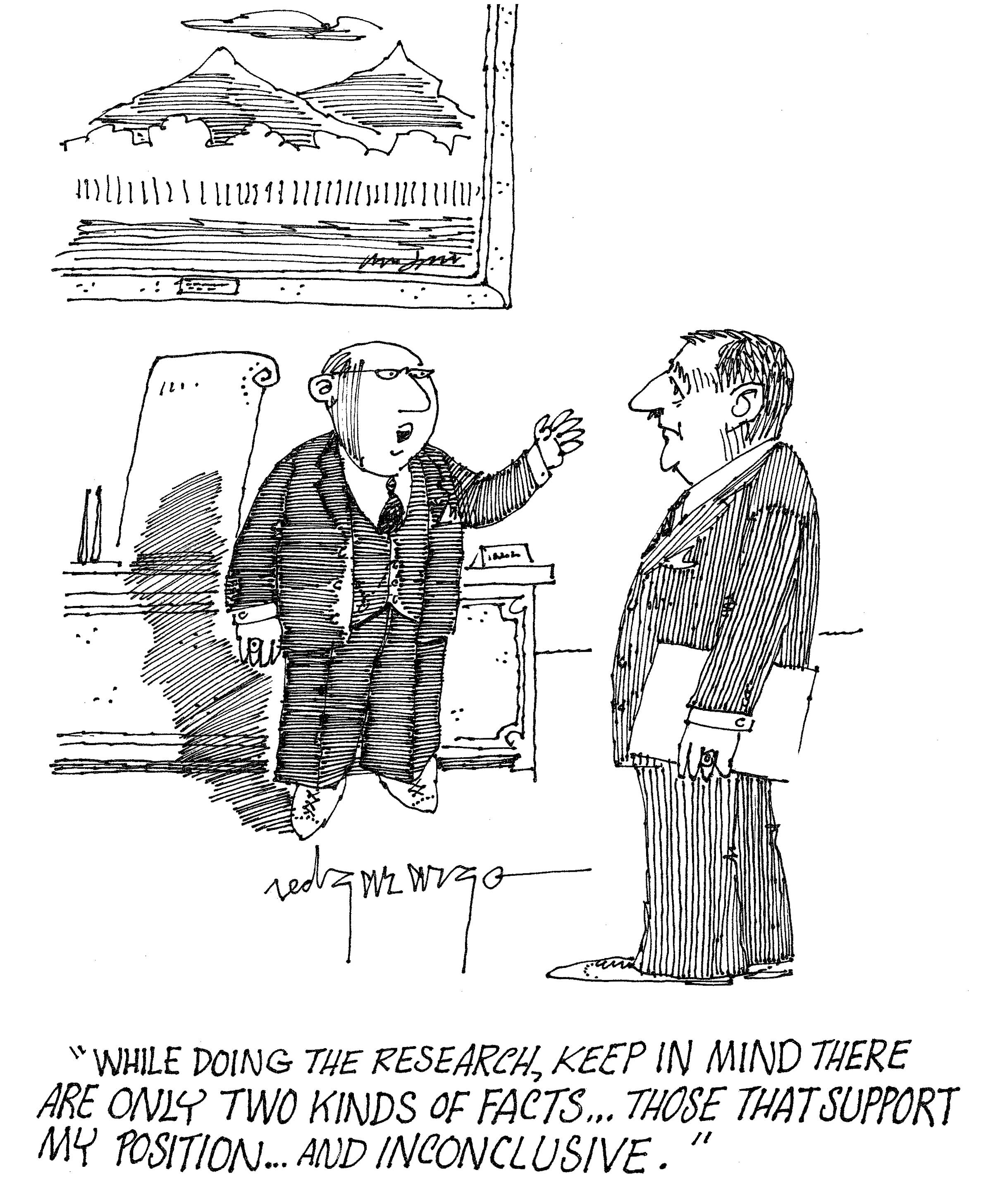

Although the scientific method is our greatest way of ascertaining truth, news outlets have a terrible knack for misinterpreting its findings (if you haven’t read my post about correlation, you definitely should).

Today, I want to talk about another issue with the media’s portrayal of science: polling.

Because we can’t poll every person in a population, surveys ask a smaller “sample” of it, in the hope that the results from this sample (a “representation” of the overall population) will match what we would find if we did poll everyone.

However, one thing budding psychophilosophers should ALWAYS be on the lookout for is where these samples were drawn from.

For example, let’s say I’m trying to figure out whether Americans prefer coffee or tea. Because it would be impossible for me to poll every person in the United States, I go to the local retirement home and ask everyone there.

If I looked at my data and told you, “75% of the US population prefers tea to coffee,” would you believe me?

I sure hope not. But why?

Well, maybe there’s a sampling bias. That is, maybe older people are simply more likely to prefer tea than the US population as a whole.
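To see how badly a convenience sample like the retirement home can mislead, here is a small Python simulation. Every number in it is made up purely for illustration (it is not real survey data): it assumes 20% of the population is “older,” that older people prefer tea 75% of the time, and that everyone else prefers tea 30% of the time. The helpers `make_population`, `random_sample`, and `retirement_home_sample` are hypothetical names for this sketch.

```python
import random

# HYPOTHETICAL numbers, invented for illustration only (not survey data):
# 20% of people are "older"; older people prefer tea 75% of the time,
# everyone else prefers tea 30% of the time.
OLDER_SHARE = 0.20
P_TEA_OLDER = 0.75
P_TEA_YOUNGER = 0.30


def make_population(size, rng):
    """Build a list of (is_older, prefers_tea) pairs."""
    people = []
    for _ in range(size):
        is_older = rng.random() < OLDER_SHARE
        p_tea = P_TEA_OLDER if is_older else P_TEA_YOUNGER
        people.append((is_older, rng.random() < p_tea))
    return people


def tea_share(people):
    """Fraction of the given people who prefer tea."""
    return sum(prefers_tea for _, prefers_tea in people) / len(people)


def random_sample(population, n, rng):
    """Simple random sample: everyone is equally likely to be picked."""
    return rng.sample(population, n)


def retirement_home_sample(population, n, rng):
    """Convenience sample: only older people can ever be picked."""
    older = [person for person in population if person[0]]
    return rng.sample(older, n)


rng = random.Random(42)  # fixed seed so the run is repeatable
population = make_population(100_000, rng)

print(f"whole population: {tea_share(population):.2f}")
print(f"random sample:    {tea_share(random_sample(population, 1000, rng)):.2f}")
print(f"retirement home:  {tea_share(retirement_home_sample(population, 1000, rng)):.2f}")
```

Under these made-up numbers, the true tea share should land near 0.2 × 0.75 + 0.8 × 0.30 = 0.39. The random sample should come out close to that, while the retirement-home sample should sit near 0.75, because it only ever asks the group that likes tea most. Asking more residents would not fix it: a bigger biased sample is just more confidently wrong.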

And this is exactly what you have to question whenever news outlets provide “data” from their polls.

For example, if a news outlet is known to attract liberal viewers and then hosts a survey on its website, what kind of people are visiting that website in the first place, let alone filling out the survey? Likewise, if a conservative news outlet hosted its own online survey, the responses would presumably lean conservative, so neither survey gives a representative picture of US opinion.

In general, always ask who the survey responses are coming from. If the respondents are mostly a particular “type” of person, their responses are likely biased in that direction.

Thus, if I gave a survey on this site about how much you like my posts, it would clearly show a bias toward “they’re the greatest thing ever”—why else would you keep coming here to read them?

Unless of course you still haven’t figured out how to unsubscribe.

Pollingly,

jdt

You are absolutely right, young psychophilosopher.

It’s not just the demographics of the participants (age, gender, status, wealth, height, weight, ethnicity, sexual orientation, teeth or no teeth, etc.), but also how the questions are framed (the wording, the structure, whether answers rely on emotion or logic, likely voters vs. registered voters vs. non-voters, liberal vs. conservative vs. independent, etc.), as well as the environment of the country or world at the time.

Be suspicious of polls and the information they present unless you have done your own research. This is true of all things, from global warming to butter vs. margarine.

I’m glad to hear you agree! Usually, if you can truly “randomly” select participants, individual differences won’t matter because, statistically speaking, randomness should make the sample resemble the population as a whole. However, true randomness is rarely (if ever) possible, so such skepticism is founded on commendable logic!