Robot Psychology

We’re fascinated with robots because they are reflections of ourselves. — Ken Goldberg

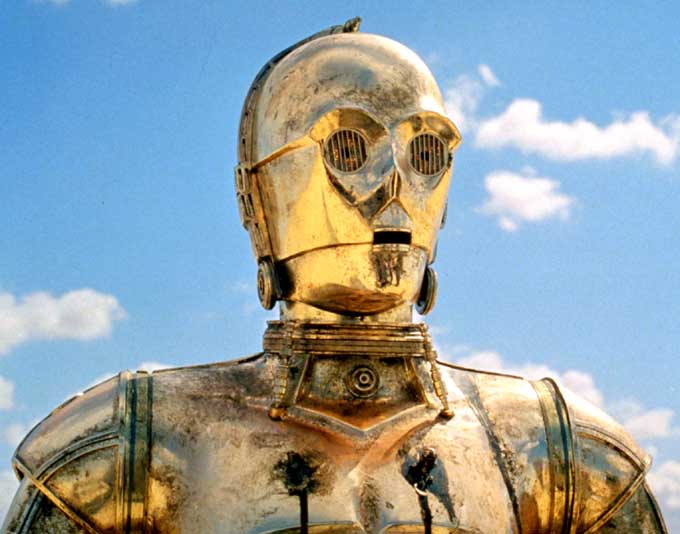

If you watch The Terminator, robots aren't exactly friendly to humans, unless being besties in the future entails enslavement and eradication. Indeed, most movies with "sentient" robots portray them as malevolent (e.g., The Matrix; I, Robot; all of the other Terminator movies).

In our own reality, though, even Bill Gates, Elon Musk, and Stephen Hawking have warned about the risks of artificial intelligence. Before the technology gets ahead of us, this Brain Trust wants to ensure that we can control it, not only in a physical sense but in a "social impact" kind of way, too.

For example, as vehicles become automated, truck driving will become an obsolete occupation. In the United States alone, that's roughly 3.5 million jobs lost, not to mention the impact on the millions of others who depend on those paychecks. Consider all of the small towns that sprang up, and still survive, because of truck stops: when the truckers go, so too will those towns.

Thus, even if robots don’t rule over us directly, they can still topple societies if developed and implemented carelessly.

But what if robots do take a more science fiction-based route? What if this new, superior "species" reduced humans to the same status that humans have imposed on the animals beneath them?

Well, let’s talk some robot psychology.

First, a common benchmark for whether artificial intelligence can "pass" as human is the Turing Test. In one popular version, an "interrogator" holds text conversations with both a human and a robot without knowing which is which; after multiple five-minute rounds, if the interrogator mistakes the robot for a human in more than 30% of the trials, the robot wins.

In 2014, a computer program was reported to have passed this test for the first time; however, there were some caveats. First, the program was billed as a 13-year-old boy, which set the bar for "human authenticity" relatively low (hey, we all remember what being 13 years old was like). Second, the validity of the Turing Test itself has been criticized, with a number of scholars arguing it isn't rigorous enough.

Indeed, one thing the Turing Test deliberately doesn't measure is how human the robot looks, which may matter more than you think.

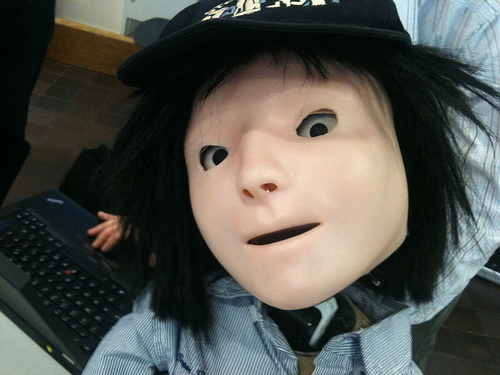

In 1970, roboticist Masahiro Mori suggested that "an increasingly humanlike appearance [of robots] would lead to increased liking up to a point, after which robots appearing too human would become unnerving" (Gray & Wegner, 2012, p. 125). This unease elicited by near-human likeness is known as the uncanny valley.

Why the uncanny valley exists has fascinated researchers, and a few years ago, Gray and Wegner (2012) proposed an explanation: faces that look too human are creepy because they make it seem as though the robot has a mind, in particular one capable of feeling.

To test this, the researchers filmed a robot interacting with a human from one of two angles. In the first, the camera was behind the robot, so viewers could see only its wires and circuits. In the second, the camera was in front of the robot, so viewers could see its humanlike face.

Participants shown the robot's face (vs. its wiry back) more strongly endorsed the idea that the robot had a mind, and that perception in turn made them more creeped out by it.

So when the robots one day do take over, try not to stare at their weirdly plastic noses. Even a robot can tell when you’re gawking.

Anti-robotically,

jdt

Everyday Psychology: What's more threatening: a bunch of different-looking robots all organized in lines, or a bunch of identical robots all organized in lines? When robots all look the same (as in the second case), we perceive them as having more "groupiness" or "cohesiveness," which makes them seem more threatening (like encountering an army). As we incorporate robots more into our lives, we'll have to weigh even minor considerations like their aesthetic design. If you were designing a robot to work in and clean your house, how would you want it to look?

Gray, K., & Wegner, D. M. (2012). Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition, 125(1), 125-130.
